EasyAR Motion Fusion

What is EasyAR Motion Fusion

EasyAR motion fusion is a technique that improves image and object tracking by combining it with motion tracking when a motion tracking device is available.

If a device (mobile phone, eyewear, or headset) has VIO (visual-inertial odometry) capability, provided by any hardware or software solution, we call it a motion tracking device. Such devices include, but are not limited to, devices that can run EasyAR motion tracking, ARCore, ARKit, or Huawei AR Engine, and VIO-capable eyewear such as Nreal Light.

EasyAR motion fusion makes image and object tracking stable and jitter-free. It also keeps a target tracked after the image or object leaves the camera's field of view.
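Plain pose composition gives one way to see how tracking can continue outside the camera's view. The following is a simplified planar sketch in Python, not EasyAR's implementation. `Pose2D`, `anchor_target`, and `predict_target_in_camera` are hypothetical names. Poses are reduced to 2D (x, y, heading), and +y is taken as the camera's forward direction. While the target is visible, the pose measured by image or object tracking is combined with the device pose from motion tracking to fix the target in the world frame. Once the target leaves the view, its camera-relative pose can still be derived from the device pose alone.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class Pose2D:
    """Planar rigid transform (a simplification of a 3D pose): rotate by theta, then translate by (x, y)."""
    x: float
    y: float
    theta: float

    def compose(self, other: "Pose2D") -> "Pose2D":
        """Return self * other: express `other` (given in self's frame) in self's parent frame."""
        c, s = math.cos(self.theta), math.sin(self.theta)
        return Pose2D(self.x + c * other.x - s * other.y,
                      self.y + s * other.x + c * other.y,
                      self.theta + other.theta)

    def inverse(self) -> "Pose2D":
        """Return the transform that undoes this one (R^T, -R^T t)."""
        c, s = math.cos(self.theta), math.sin(self.theta)
        return Pose2D(-(c * self.x + s * self.y), s * self.x - c * self.y, -self.theta)


def anchor_target(camera_in_world: Pose2D, target_in_camera: Pose2D) -> Pose2D:
    """While the target is visible: fix its pose in the motion tracking (world) frame."""
    return camera_in_world.compose(target_in_camera)


def predict_target_in_camera(camera_in_world: Pose2D, target_in_world: Pose2D) -> Pose2D:
    """While the target is out of view: derive its camera-relative pose from the device pose alone."""
    return camera_in_world.inverse().compose(target_in_world)


# The camera starts at the world origin and sees the target 2 m straight ahead (+y).
target_world = anchor_target(Pose2D(0.0, 0.0, 0.0), Pose2D(0.0, 2.0, 0.0))

# The device moves 1 m forward; only the VIO camera pose is used, not a new image detection.
after_step = predict_target_in_camera(Pose2D(0.0, 1.0, 0.0), target_world)

# The device turns 90 degrees to the left at the origin; the target is now to the camera's right (+x).
after_turn = predict_target_in_camera(Pose2D(0.0, 0.0, math.pi / 2), target_world)
```

Because `target_world` is cached, this picture only holds if the target stays where it was. That is why the target limitations below require static targets.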

How to Use EasyAR Motion Fusion

A scene that uses motion fusion differs from one that does not in both the EasyAR AR Session and the targets. As described in Start from Zero, there are always two ways to create an AR Session.

Create AR Session Using Presets

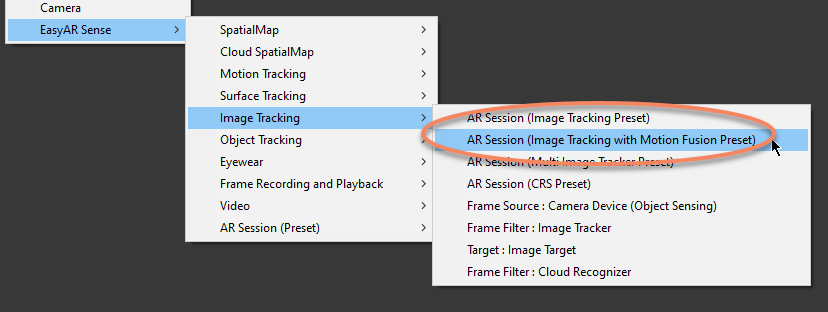

You can create a motion fusion AR Session from the Image Tracking and Object Tracking presets that have Motion Fusion in their names.

Create AR Session Node-by-Node

You can also create a motion fusion AR Session node by node.

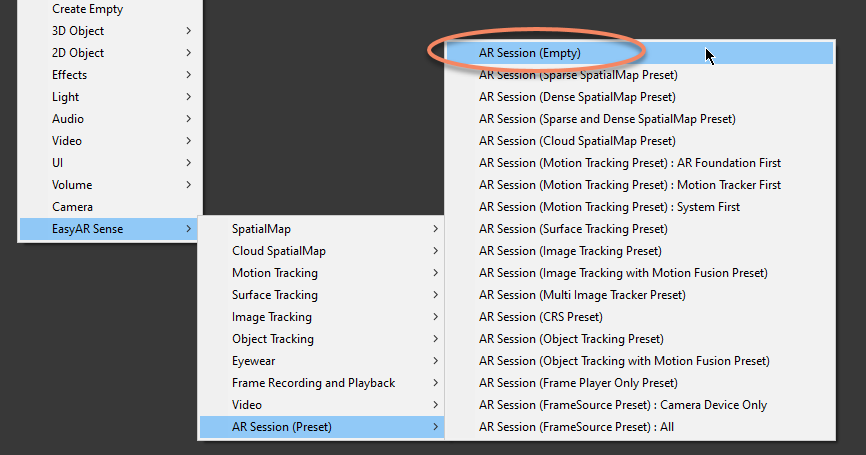

First, create an empty AR Session via EasyAR Sense > AR Session (Preset) > AR Session (Empty).

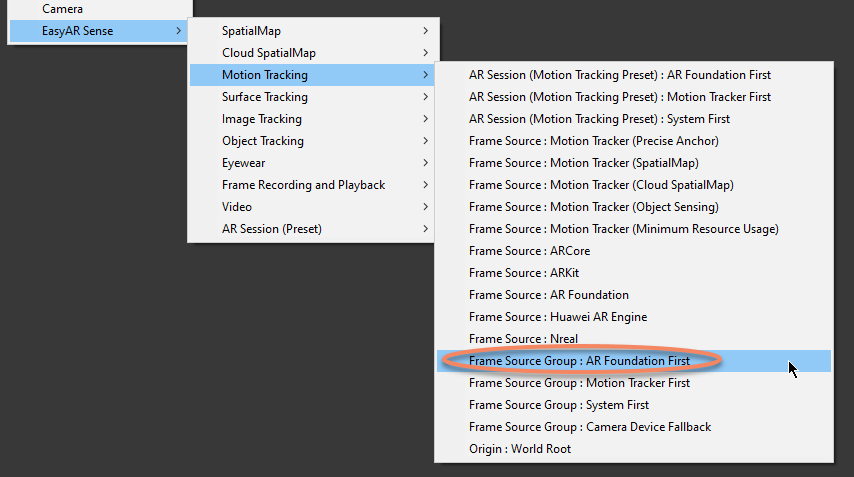

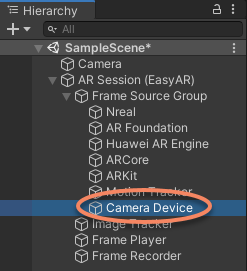

Then add a frame source to the session. Motion fusion requires a FrameSource that represents a motion tracking device, so you will usually need different frame sources on different devices. Here we create a Frame Source Group via EasyAR Sense > Motion Tracking > Frame Source Group : AR Foundation First. The frame source that the session actually uses is selected at runtime. Depending on your needs, you can add several frame source groups or a single frame source to the session.
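The runtime selection described above can be pictured as "the first available source wins". The sketch below is hypothetical Python, not the EasyAR API. `FrameSourceOption`, `select_frame_source`, and `motion_fusion_active` are illustrative names, and the availability checks are simulated. The order of sources in the example group is an assumption, not the actual order used by Frame Source Group : AR Foundation First. The sketch also shows that fusion can only take effect when the selected source represents a motion tracking device.

```python
from dataclasses import dataclass
from typing import Callable, Optional, Sequence


@dataclass(frozen=True)
class FrameSourceOption:
    """Hypothetical stand-in for one frame source inside a frame source group."""
    name: str
    is_available: Callable[[], bool]  # simulated runtime check: can this device use the source?
    provides_motion: bool             # True if the source represents a motion tracking device


def select_frame_source(group: Sequence[FrameSourceOption]) -> Optional[FrameSourceOption]:
    """Return the first available source in group order, or None if none can be used."""
    for option in group:
        if option.is_available():
            return option
    return None


def motion_fusion_active(selected: Optional[FrameSourceOption], fusion_enabled: bool) -> bool:
    """Fusion can only take effect if it is enabled and the selected source reports device motion."""
    return fusion_enabled and selected is not None and selected.provides_motion


# Simulated device on which only the ARKit source reports itself as available.
DEVICE_SUPPORTS = {"ARKit"}


def supported(name: str) -> Callable[[], bool]:
    """Build a simulated availability check for the named source."""
    return lambda: name in DEVICE_SUPPORTS


group = [
    FrameSourceOption("AR Foundation", supported("AR Foundation"), provides_motion=True),
    FrameSourceOption("ARKit", supported("ARKit"), provides_motion=True),
    FrameSourceOption("ARCore", supported("ARCore"), provides_motion=True),
]
chosen = select_frame_source(group)
print(chosen.name if chosen else "no frame source", motion_fusion_active(chosen, True))
```

In this model, appending a source with `provides_motion=False` to the end of a group lets selection succeed on more devices. On those devices, `motion_fusion_active` stays `False`.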

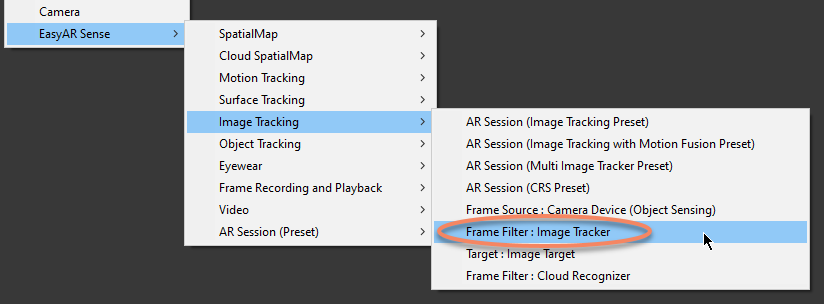

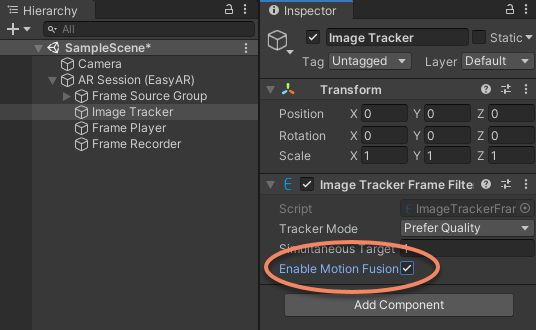

After adding the frame source, add the frame filters used by the session. Add an ImageTrackerFrameFilter to the session via EasyAR Sense > Image Tracking > Frame Filter : Image Tracker.

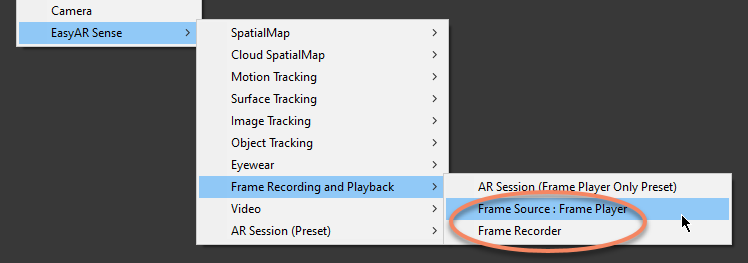

If you want to record input frames on a device and play them back on a PC for diagnosis in the Unity Editor, you can add a FramePlayer and a FrameRecorder to the session. (To use them, you also need to change FrameSource.FramePlayer or FrameSource.FrameRecorder.)

Then turn on motion fusion in the ImageTrackerFrameFilter you created.

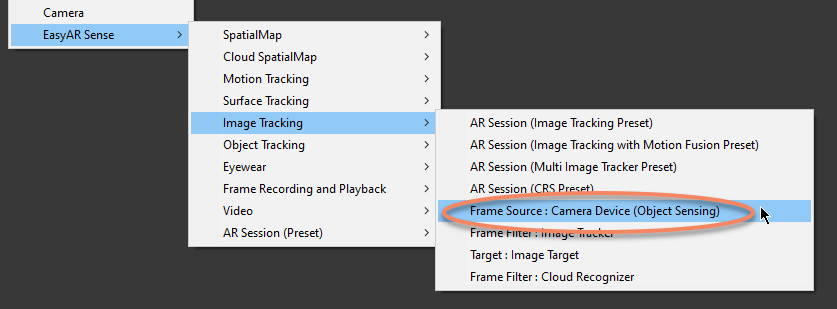

If you want tracking to fall back to normal tracking when no motion tracking device is available, add a CameraDeviceFrameSource to the end of the frame source group via EasyAR Sense > Image Tracking > Frame Source : Camera Device (Object Sensing).

Target Limitation

There are two points to note when using motion fusion:

- The target scale must match the target's real-world size. This applies to ImageTarget.scale and ObjectTarget.scale, or, when the target is created from TargetController input, to ImageTargetController.ImageFileSourceData.Scale and ObjectTargetController.ObjFileSourceData.Scale.

- The target image or object must not move in the real world.
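A simplified pinhole-camera model shows why the configured scale has to match reality. The following Python sketch is illustrative only and is not EasyAR's algorithm. It assumes that an image target's scale is its physical width in metres, and it reduces everything to distance along the viewing axis. `estimated_depth` and `anchored_position` are hypothetical names. Image tracking infers depth from the target's apparent size, while motion tracking measures camera movement in real metres. If the two disagree, the fused world position of the target shifts as the camera moves.

```python
def estimated_depth(true_depth_m: float, true_width_m: float, configured_width_m: float,
                    focal_px: float = 1000.0) -> float:
    """Depth a pinhole-camera tracker would recover from the target's apparent size.

    The target appears focal * true_width / true_depth pixels wide; the tracker
    inverts that relation using the configured width instead of the true one.
    The focal length cancels out and only makes the pixel step explicit.
    """
    image_width_px = focal_px * true_width_m / true_depth_m
    return focal_px * configured_width_m / image_width_px


def anchored_position(camera_z: float, target_z: float, true_width_m: float,
                      configured_width_m: float) -> float:
    """Target position along the viewing axis: VIO camera position plus tracked depth."""
    depth = estimated_depth(target_z - camera_z, true_width_m, configured_width_m)
    return camera_z + depth


TRUE_WIDTH = 0.2  # a 20 cm wide printed image, 2 m in front of the starting camera position
for configured in (0.2, 0.1):
    before = anchored_position(0.0, 2.0, TRUE_WIDTH, configured)
    after = anchored_position(1.0, 2.0, TRUE_WIDTH, configured)  # camera moved 1 m closer
    print(f"configured {configured} m: anchor {before:.2f} m -> {after:.2f} m")
# configured 0.2 m: anchor 2.00 m -> 2.00 m
# configured 0.1 m: anchor 1.00 m -> 1.50 m
```

In this model, moving the camera d metres toward the target shifts the anchor by d·(1 − S/W), where S is the configured width and W is the true width. The anchor stays put only when S equals W.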

For more details, see the sample ImageTracking_MotionFusion.